AI moves fast, and we often find ourselves juggling several Large Language Models (LLMs) to get the best results for specific tasks. This creates several challenges:

- Switching between multiple web interfaces, each dedicated to a single LLM provider

- Losing conversation history when moving from one interface to another

- Difficulty in comparing responses from different LLMs side by side

- Managing multiple browser tabs and credentials

Today, I’m excited to share my experience setting up LibreChat, an open-source solution that elegantly solves these challenges.

What is LibreChat?

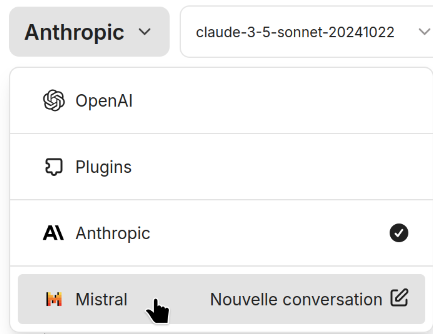

LibreChat is a free, open-source AI chat platform that acts as a unified interface for multiple AI providers. Think of it as your personal hub for AI conversations, allowing you to:

- Switch between AI models on-the-fly without disrupting your chat flow

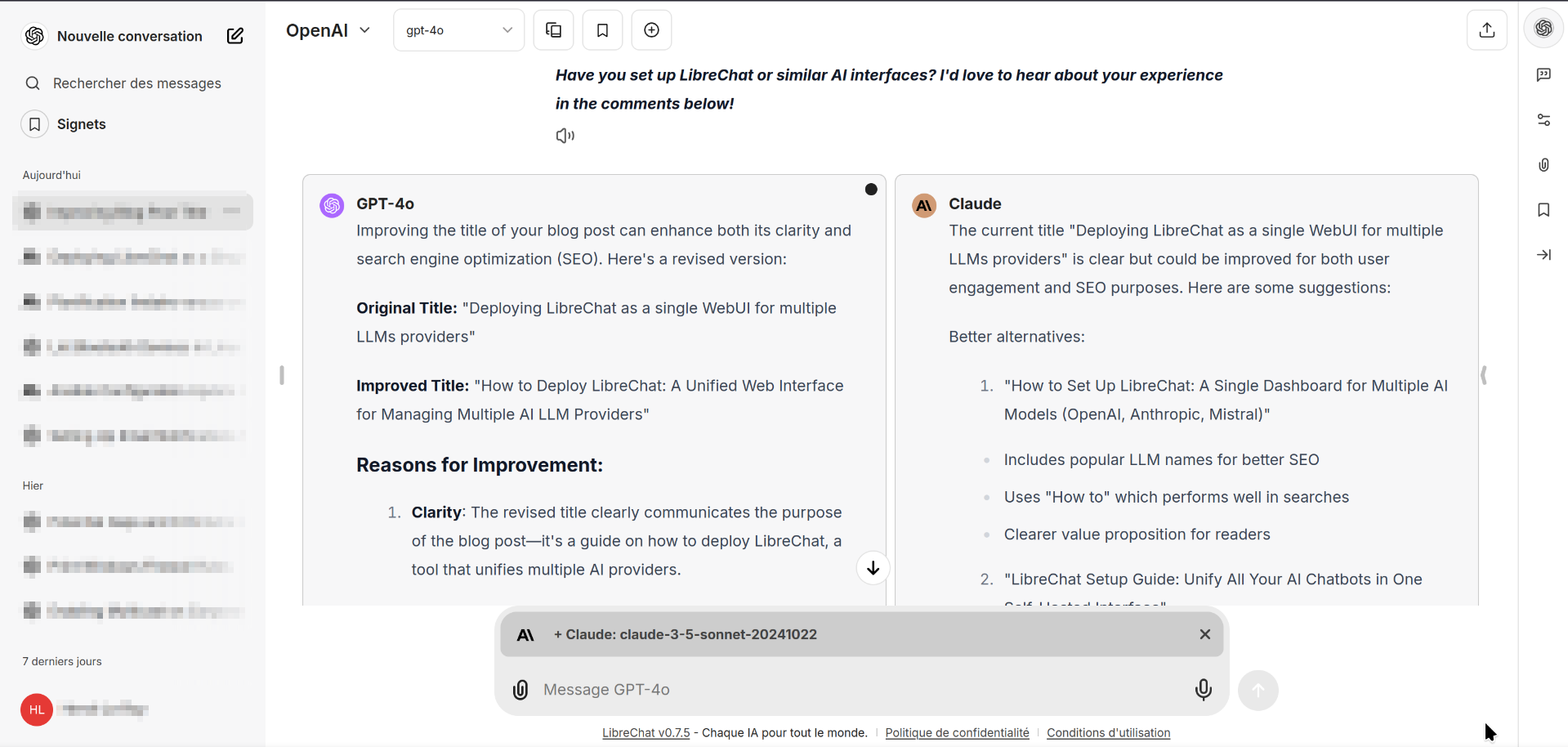

- Stream responses from multiple LLMs in parallel (similar to Chatbot Arena)

- Keep all your conversations in one place, regardless of the AI provider

- Compare different models’ responses side by side

Some additional features include:

- 🤖 Support for major AI providers (OpenAI, Anthropic, Mistral, Google, and more)

- 🔒 Self-hosted solution with privacy in mind

- 🌐 Multilingual interface

- 🖼️ Support for multimodal conversations (images, files)

Technical Setup

Docker Compose is the recommended installation method because it is simple and reliable. Refer to the LibreChat website for detailed instructions. My setup uses Docker Compose with Traefik as a reverse proxy and Authelia for authentication.

Here’s how to replicate it:

- First, create a `docker-compose.override.yml` file:

Note: this is very specific to my own setup. You need to customize it for your setup, with or without a proxy.

    services:
      api:
        volumes:
          # Mount the custom LibreChat configuration into the container
          - ./librechat.yaml:/app/librechat.yaml
        networks:
          - default
          - net
        labels:
          # Traefik routing: HTTPS on librechat.hleroy.com, behind Authelia
          - "traefik.enable=true"
          - "traefik.http.routers.librechat.rule=Host(`librechat.hleroy.com`)"
          - "traefik.http.routers.librechat.entrypoints=websecure"
          - "traefik.http.routers.librechat.tls=true"
          - "traefik.http.routers.librechat.tls.certresolver=letsencrypt"
          - "traefik.http.routers.librechat.middlewares=authelia@docker"
          - "traefik.http.services.librechat.loadbalancer.server.port=3080"
      meilisearch:
        environment:
          - MEILI_LOG_LEVEL=WARN
      mongodb:
        # Run MongoDB without authentication and with reduced logging
        command: mongod --noauth --quiet
    networks:
      # Pre-existing network, not created by this Compose project
      net:
        external: true
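
Because `net` is declared with `external: true`, Compose expects that network to exist already and will not create it. If you are starting from scratch, a small guard like the one below can help. It is only a sketch: `ensure_network` is a hypothetical helper name, and `net` is simply the network name from the override file above.

```shell
# Hypothetical helper: create a Docker network only if it is missing.
ensure_network() {
  local name="$1"
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network '$name' already exists"
  else
    docker network create "$name"
  fi
}
```

Call it once before starting the stack, for example `ensure_network net`.
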

- Create a `librechat.yaml` configuration file:

Note: the custom endpoint configuration is what enables the Mistral AI provider.

    version: 1.1.5
    cache: true
    interface:
      privacyPolicy:
        externalUrl: "https://librechat.ai/privacy-policy"
        openNewTab: true
      termsOfService:
        externalUrl: "https://librechat.ai/tos"
        openNewTab: true
    registration:
      socialLogins: ["github", "google", "discord", "openid", "facebook"]
    endpoints:
      custom:
        - name: "Mistral"
          apiKey: "${MISTRAL_API_KEY}"
          baseURL: "https://api.mistral.ai/v1"
          models:
            default:
              [
                "mistral-small-latest",
                "mistral-large-latest",
                "codestral-latest",
                "open-codestral-mamba",
                "open-mistral-nemo",
              ]

- Configure your secrets in `.env` (refer to the LibreChat website for up-to-date details)
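
The configuration above refers to the key as `${MISTRAL_API_KEY}`, so a forgotten entry in `.env` is an easy mistake to make. Here is a rough sketch of a check that lists every `${VAR}` placeholder in a config file with no non-empty assignment in a dotenv file. `missing_env_vars` is a hypothetical helper of my own, not part of LibreChat, and it assumes simple `NAME=value` lines.

```shell
# Hypothetical helper: print placeholder names used in a config file that
# have no NAME=<non-empty> line in the given dotenv file.
# Returns 1 if at least one name is missing, 0 otherwise.
missing_env_vars() {
  local config_file="$1" env_file="$2" name status=0
  # Collect unique placeholder names such as ${MISTRAL_API_KEY}.
  for name in $(grep -oE '\$\{[A-Za-z_][A-Za-z0-9_]*\}' "$config_file" | tr -d '${}' | sort -u); do
    # Require at least one character after the equals sign.
    if ! grep -qE "^${name}=.+" "$env_file"; then
      echo "$name"
      status=1
    fi
  done
  return "$status"
}
```

For example, `missing_env_vars librechat.yaml .env` prints nothing when every placeholder has a value.
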

Then, you are ready to run:

    docker compose -f docker-compose.yml -f docker-compose.override.yml up -d --build

Conclusion

LibreChat provides an elegant solution for managing multiple AI providers through a single interface. This setup offers a secure, self-hosted environment with the flexibility to add or modify providers as needed.

Feel free to experiment with different configurations and providers to find the setup that works best for your needs. The open-source nature of LibreChat means you can always contribute back to the community or customize it further to match your requirements.

Have you set up LibreChat or similar AI interfaces? I’d love to hear about your experience in the comments below!